Biography

Hello 🎅🏼, my name is Jie Yuan. Welcome to my site.

I am a graduate of the Navigation and Field Robotics program at Leibniz University Hannover, specializing in Simultaneous Localization and Mapping (SLAM) and deep-learning-based computer vision. Python (for deep learning) and C++ (for SLAM) are my principal development languages; I sometimes use MATLAB to verify algorithms.

I develop multi-sensor (camera, radar, IMU, GPS) perception and filter-based localization for mobile robots. Deep-learning-based scene understanding and reinforcement learning for automatic control are also major focuses of my study. My study program combines production and learning: working in teams to produce practical applications supporting autonomous driving while applying fresh knowledge from my studies. My relevant areas are listed below.

- HD Mapping

- Scene Segmentation

- Object Tracking

- Robotic Localization

- Robotic Perception

Please feel free to contact me 📧.

Technical Books

Here are books 📚 that I like to consult often during development:

- Probabilistic Robotics

- State Estimation for Robotics

- Multiple View Geometry in Computer Vision

- Deep Learning

- Pattern Recognition and Machine Learning

Projects

The projects below were done during my master's studies while I was working as a research assistant.

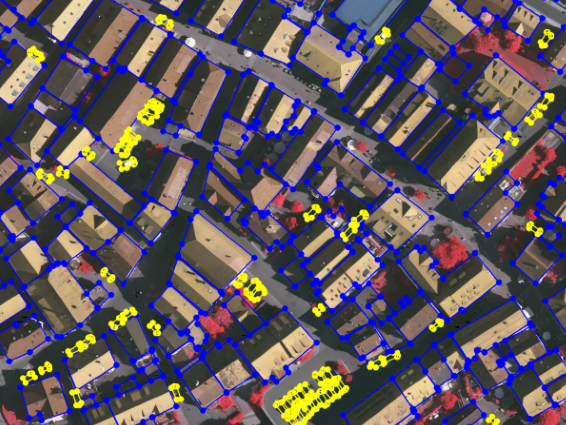

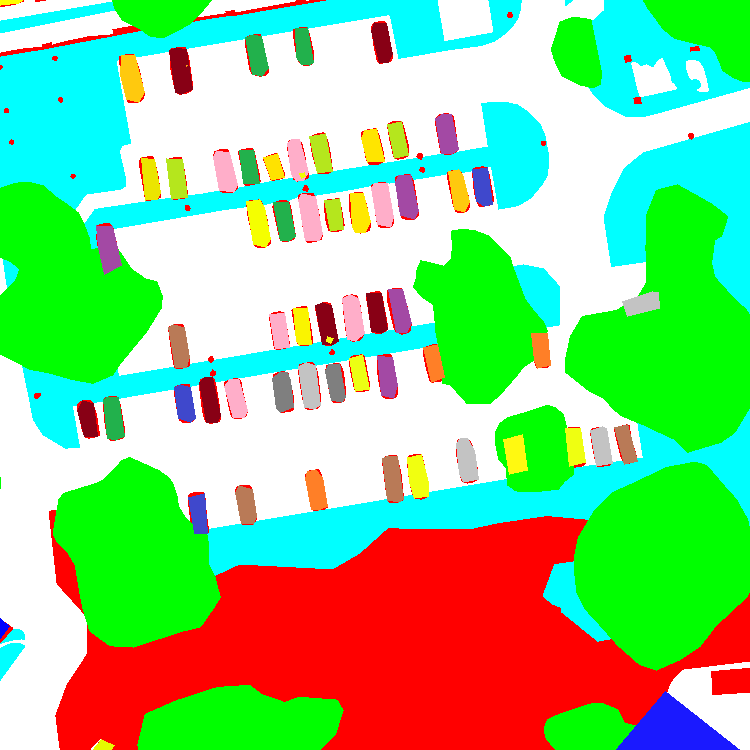

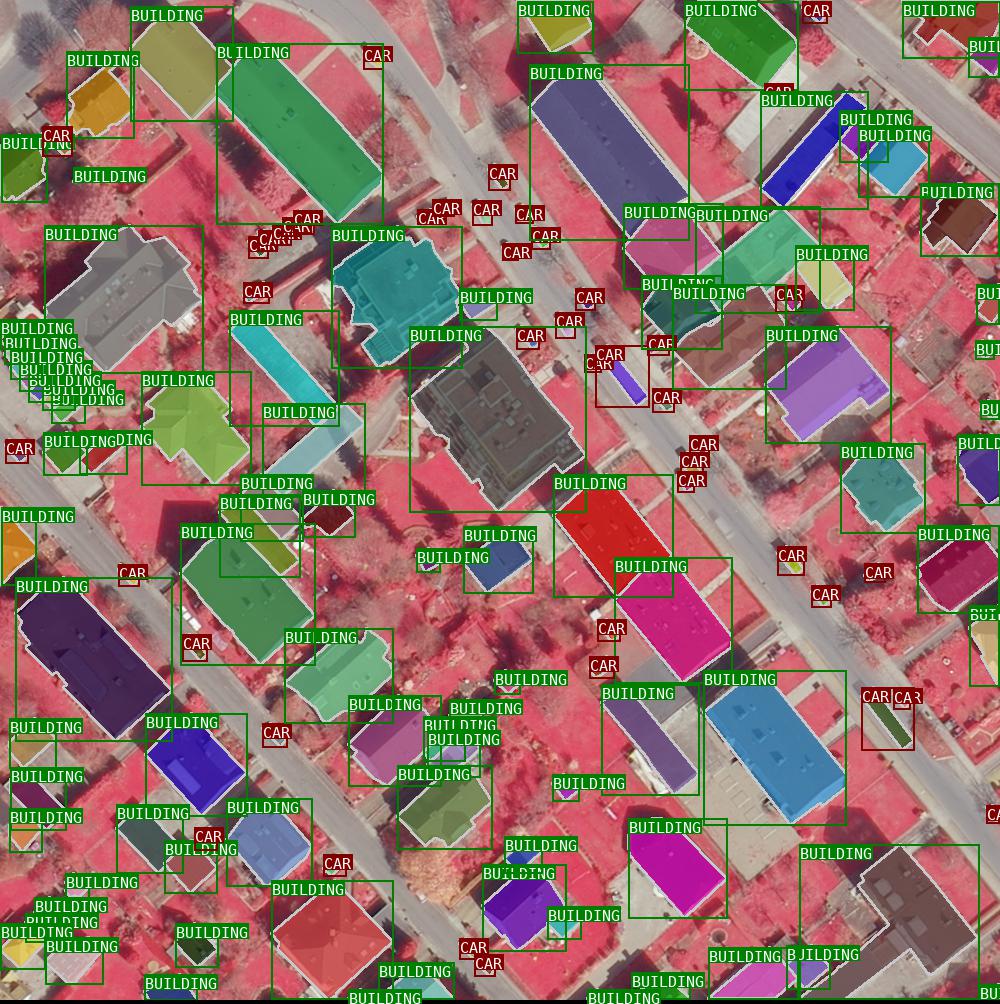

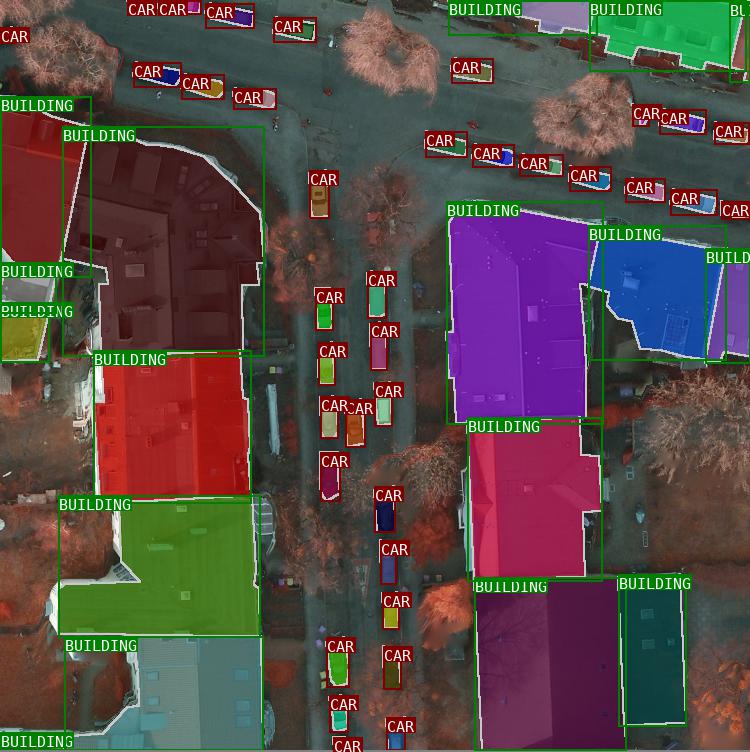

PanUrban Dataset - a panoptic dataset of aerial images

PanUrban Dataset covers the city regions of Vaihingen and Potsdam. It treats cars and buildings as things, and trees, impervious surfaces, etc. as stuff. Things denote countable objects that can be separated into instances, whereas stuff is uncountable and more abstract, such as sky.

(Figure panels: Vaihingen sample, Potsdam sample)

Fig. Blue footprints enclose building instances; yellow footprints enclose car instances.
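To make the things/stuff distinction concrete, here is a minimal sketch of fusing a semantic label map and an instance label map into a single panoptic id map. The class ids and the `class_id * 1000 + instance_index` encoding are assumptions chosen for illustration (a common convention), not necessarily the PanUrban label format.

```python
import numpy as np

# Hypothetical class ids; the actual PanUrban label map is not given here.
THING_CLASSES = {1: "building", 2: "car"}
STUFF_CLASSES = {3: "tree", 4: "impervious_surface"}
LABEL_DIVISOR = 1000  # assumed encoding: panoptic_id = class_id * 1000 + instance_index


def to_panoptic(semantic: np.ndarray, instance: np.ndarray) -> np.ndarray:
    """Fuse an HxW semantic map and an HxW instance map (0 = no instance) into panoptic ids."""
    panoptic = semantic.astype(np.int64) * LABEL_DIVISOR
    is_thing = np.isin(semantic, list(THING_CLASSES))
    # Things keep their instance index; stuff pixels stay at class_id * divisor.
    panoptic[is_thing] += instance[is_thing]
    return panoptic


def decode(panoptic_id: int) -> tuple[int, int]:
    """Split a panoptic id back into (class_id, instance_index)."""
    return panoptic_id // LABEL_DIVISOR, panoptic_id % LABEL_DIVISOR
```

Two adjacent buildings then keep distinct ids (for example 1001 and 1002) even though they share the semantic class, while all tree pixels collapse to 3000.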

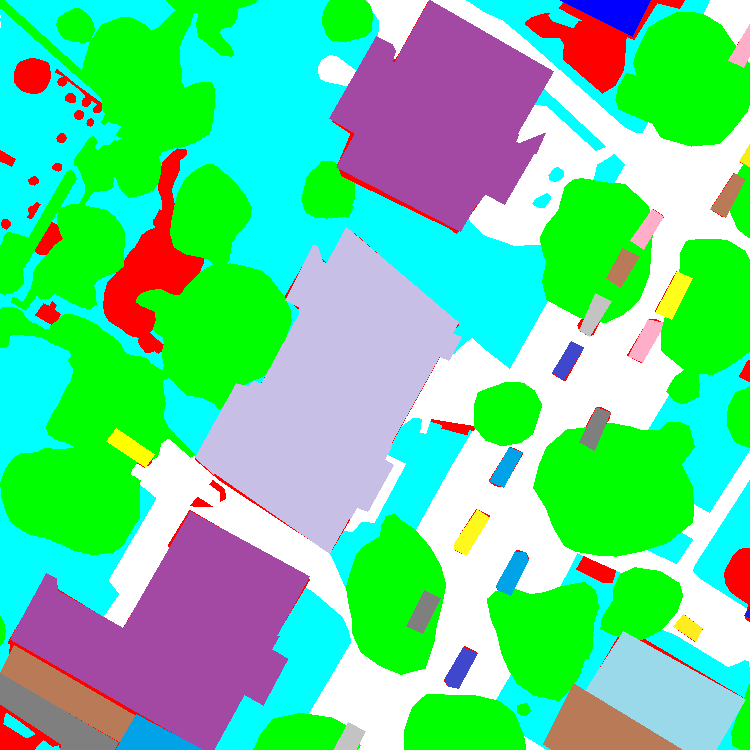

(Figure panels: Apartment, Factory, Innercity, Parking, Residual)

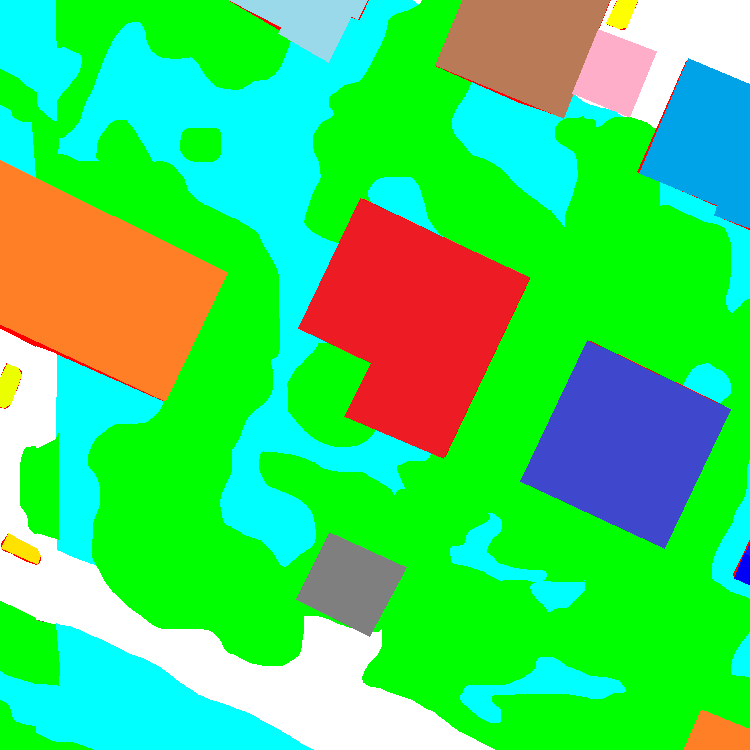

Fig. Dataset samples across different city areas.

This aerial image dataset has the following properties:

- Orthophoto: an aerial image dataset based on orthorectified images with geospatial information, which can be used directly for measurement.

- Multiple Tasks: supports object detection, instance segmentation, semantic segmentation, and panoptic segmentation.

- Adjacent Buildings: unlike some datasets such as crowdAI, most buildings in our dataset are adjacent to their neighbors; in other words, they are densely distributed. Thanks to the development of instance segmentation, distinguishing connected buildings is now possible.

- Full Range Augmentation: utilizes features across source blocks to extract more robust features.

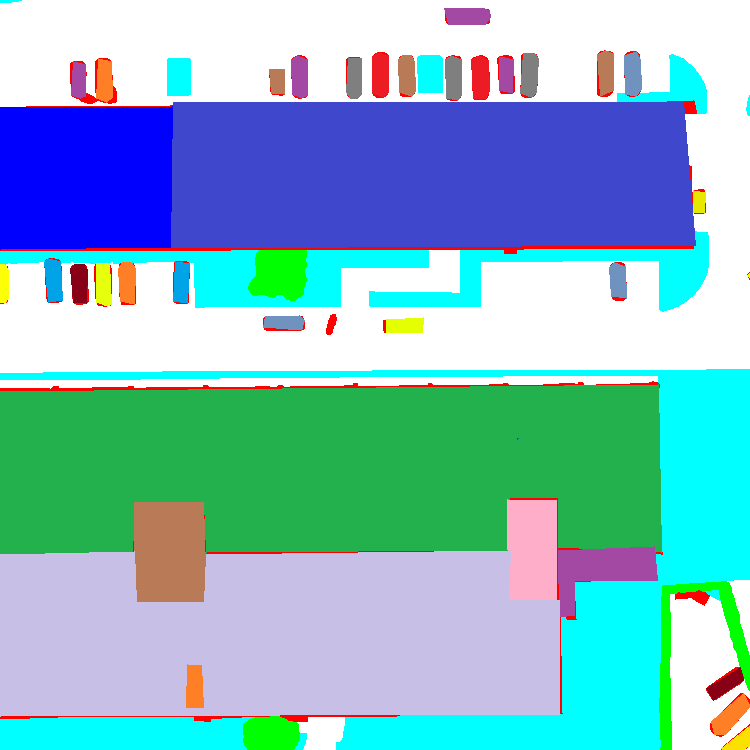

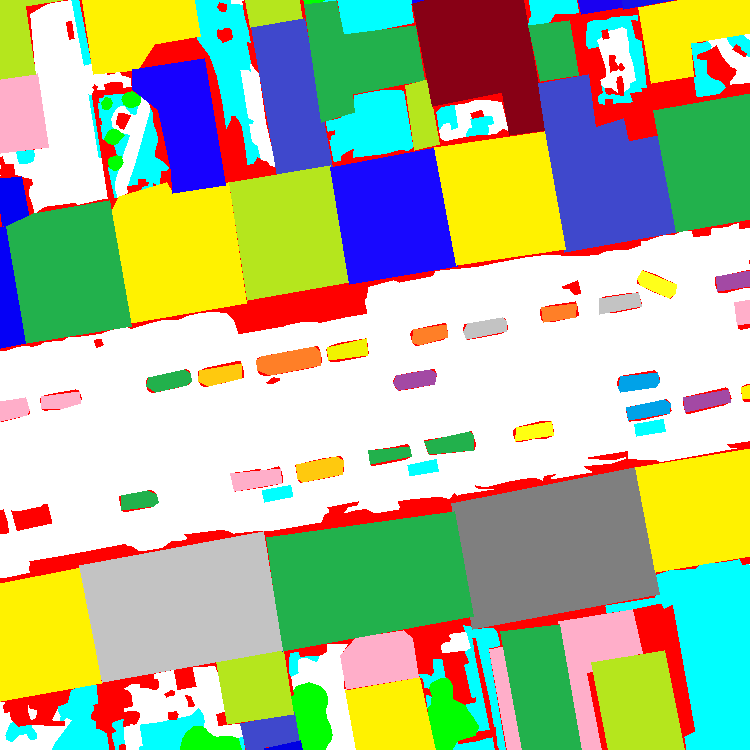

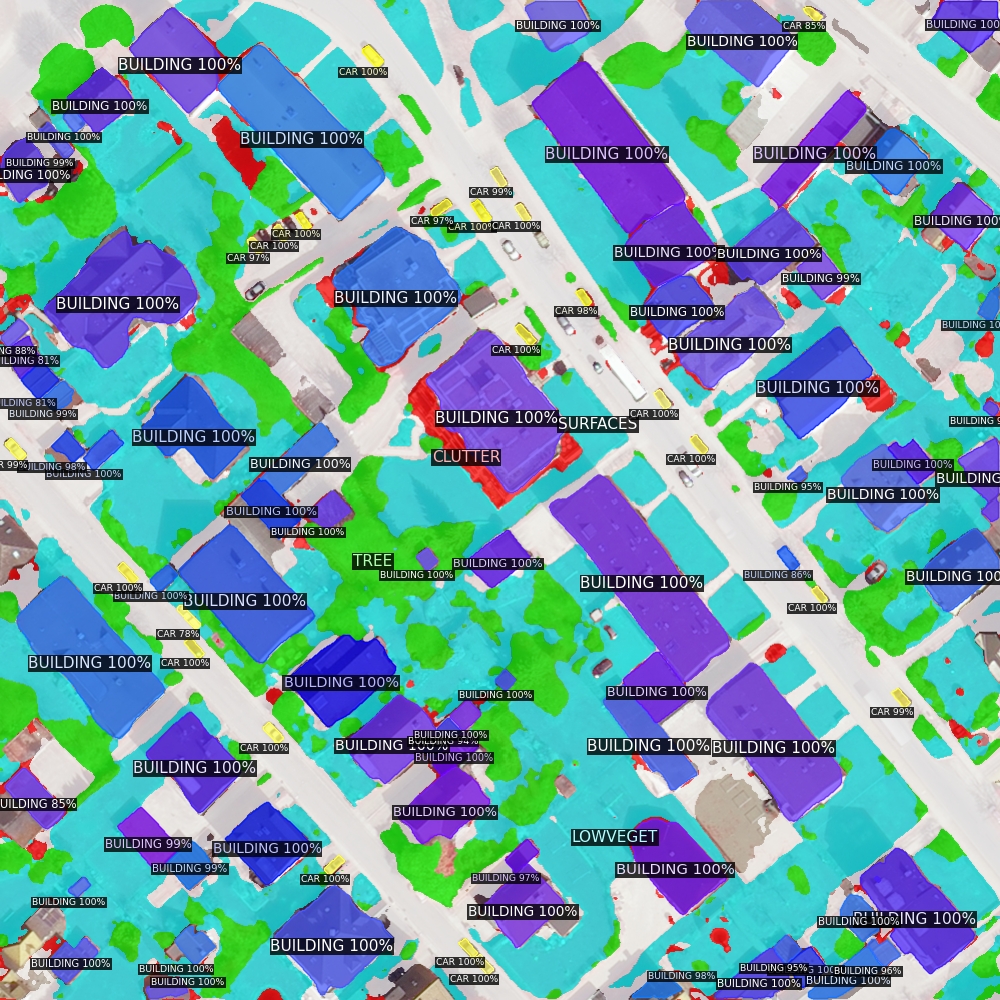

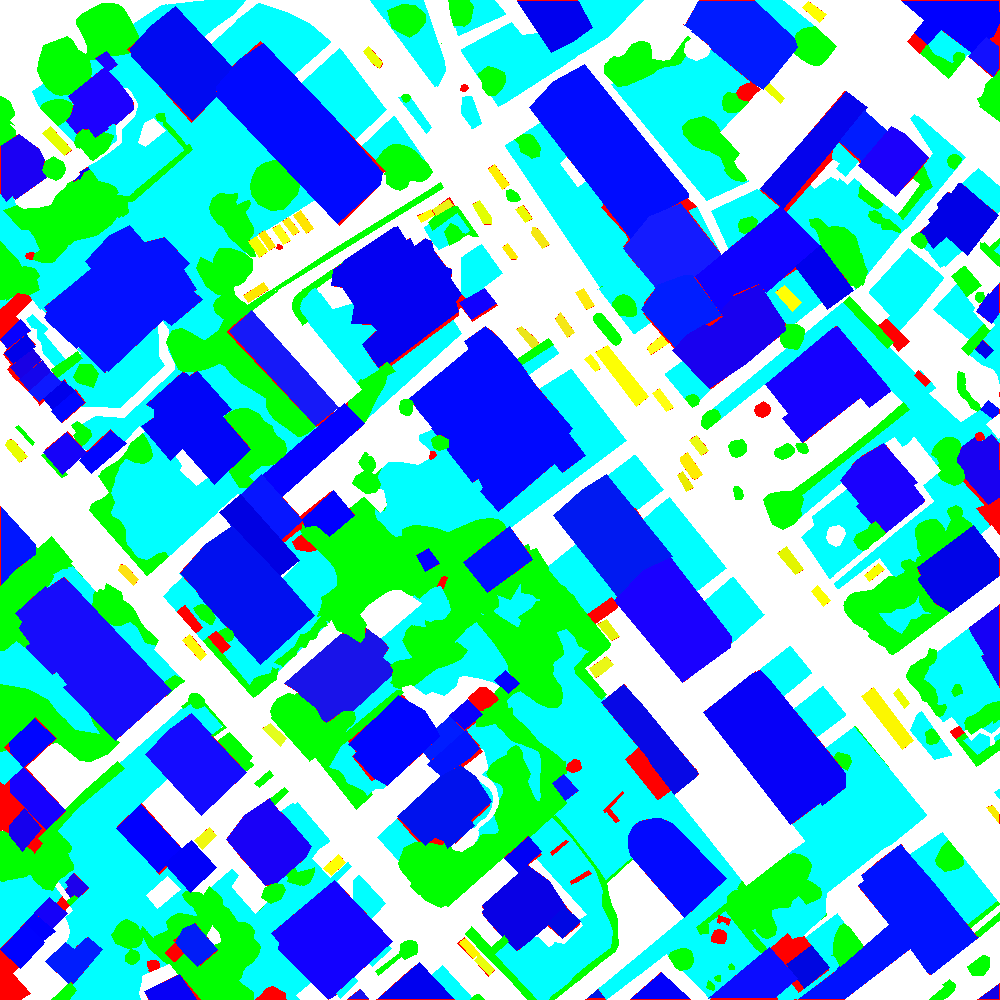

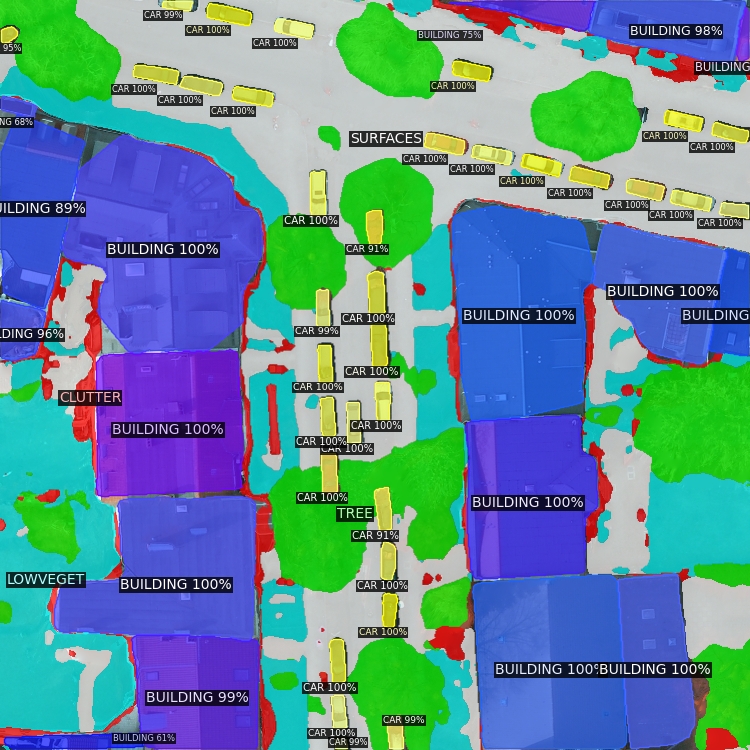

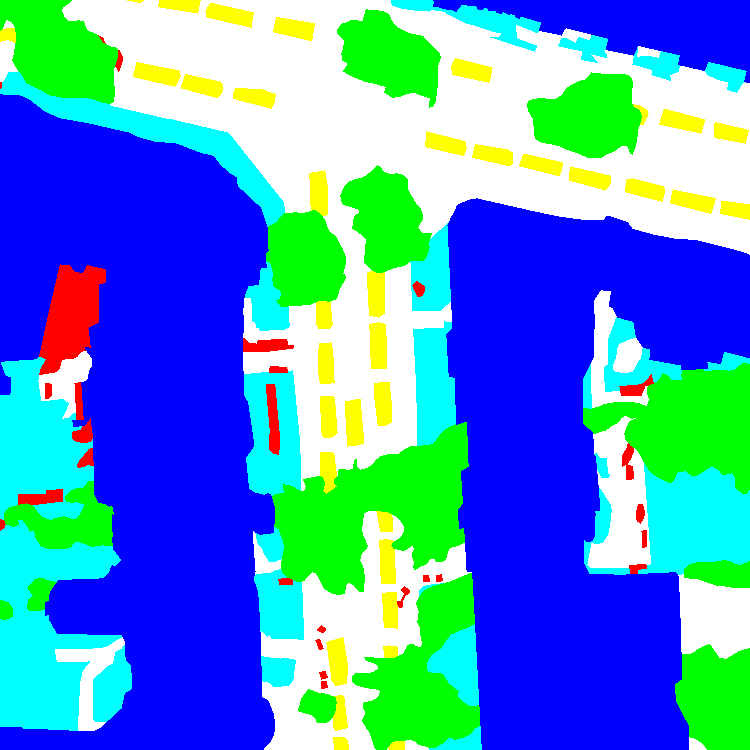

Panoptic Segmentation on PanUrban dataset

PanopticFPN is taken as the baseline model and applied to the PanUrban Dataset. Rotated bounding boxes are proposed and applied to fit rotated objects such as buildings. The experimental results show that rotated bounding boxes improve instance segmentation, but have little effect on semantic segmentation.

(Figure panels: Panoptic Result, Semantic Label, Instance Label, Source Image)

Fig. Visualization of predictions on the PanUrban dataset.
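Rotated boxes need a rotated IoU for anchor matching, evaluation, and non-maximum suppression. The sketch below, in plain Python, assumes boxes parametrized as `(cx, cy, w, h, theta)` with `theta` in radians; it clips one box polygon against the other (Sutherland-Hodgman) and measures areas with the shoelace formula. It is an illustrative stand-in, not the implementation used in the experiments.

```python
import math


def box_corners(cx, cy, w, h, theta):
    """Counter-clockwise corners of a box rotated by theta radians about its center."""
    c, s = math.cos(theta), math.sin(theta)
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]


def polygon_area(poly):
    """Absolute polygon area via the shoelace formula."""
    n = len(poly)
    return abs(0.5 * sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
                         for i in range(n)))


def _cross(a, b, p):
    """z-component of (b - a) x (p - a); >= 0 means p is left of (or on) edge a->b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def _intersect(p, q, a, b):
    """Intersection of segment p->q with the line through a and b (p, q on opposite sides)."""
    cp, cq = _cross(a, b, p), _cross(a, b, q)
    t = cp / (cp - cq)
    return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))


def clip(subject, clipper):
    """Sutherland-Hodgman: clip polygon `subject` by the convex CCW polygon `clipper`."""
    output = subject
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        inp, output = output, []
        if not inp:
            break  # nothing left to clip: the polygons do not overlap
        for j in range(len(inp)):
            p, q = inp[j - 1], inp[j]  # edge from the previous vertex p to the current vertex q
            p_in, q_in = _cross(a, b, p) >= 0, _cross(a, b, q) >= 0
            if q_in:
                if not p_in:
                    output.append(_intersect(p, q, a, b))
                output.append(q)
            elif p_in:
                output.append(_intersect(p, q, a, b))
    return output


def rotated_iou(box1, box2):
    """IoU of two rotated boxes given as (cx, cy, w, h, theta)."""
    p1, p2 = box_corners(*box1), box_corners(*box2)
    inter = clip(p1, p2)
    inter_area = polygon_area(inter) if len(inter) >= 3 else 0.0
    union = polygon_area(p1) + polygon_area(p2) - inter_area
    return inter_area / union if union > 0 else 0.0
```

For example, a 2 x 2 square and the same square rotated by 45° overlap in a regular octagon, giving an IoU of 1/√2 ≈ 0.707, whereas an axis-aligned fit of the rotated square would inflate its area and lower the score further.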

Real-time HD Map Calibration with Multiple Lidars

- Prepare the measurement setup of two lidars and a GPS receiver on a platform with a given calibration configuration. GPS could be used for localization, but here it is used to synchronize the clocks of the devices.

- Code a ROS package that takes Velodyne-64 and Velodyne-16 raw data and timestamped poses as input, and outputs calibrated point clouds mapped to real-world coordinates.

Techniques: C++, ROS, Sensor Calibration.
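Mapping each lidar into the world frame is a chain of rigid transforms: the extrinsic calibration of the lidar on the platform, followed by the platform pose at the scan's timestamp. A minimal NumPy sketch, assuming the pose has already been looked up (or interpolated) at the GPS-synchronized timestamp; the function names are hypothetical and ROS message handling is omitted.

```python
import numpy as np


def make_transform(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def rot_z(yaw: float) -> np.ndarray:
    """Rotation about the z axis by `yaw` radians."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def lidar_to_world(points: np.ndarray, T_platform_lidar: np.ndarray,
                   T_world_platform: np.ndarray) -> np.ndarray:
    """Map Nx3 lidar points into the world frame.

    T_platform_lidar: extrinsic calibration of this lidar on the platform (hypothetical input).
    T_world_platform: platform pose at the synchronized scan timestamp (hypothetical input).
    """
    homog = np.hstack([points, np.ones((points.shape[0], 1))])  # Nx4 homogeneous points
    T = T_world_platform @ T_platform_lidar                      # lidar -> platform -> world
    return (homog @ T.T)[:, :3]
```

Each lidar (Velodyne-64 and Velodyne-16) gets its own `T_platform_lidar`, while both share the same platform pose at a given timestamp, which is why a common time base matters.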

Dynamic Landmark based Visual Odometry (SFM)

Fig. 1 Visualization of matching-point filtering: the bottom row is the previous frame and the top row is the current frame. Stereo images are arranged left and right. Red points are removed.

- Extract feature points with multiple keypoint methods for comparison, such as SIFT, Harris, SURF, ORB, FREAK, BRISK, etc.

- Filter out false matches via stereo matching within a RANSAC framework.

- 3D motion estimation with frame-to-frame matching.

- Structure and motion reconstruction, and performance evaluation (accuracy, efficiency).

Techniques: C++, Visual Odometry, Scene Reconstruction.
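The RANSAC filtering and frame-to-frame motion steps can be illustrated with a minimal sketch: given matched 3D points triangulated from the stereo pair in the previous and current frames, draw minimal 3-point samples, fit a rigid motion with the SVD-based Kabsch method, and keep the hypothesis with the most inliers. The threshold, iteration count, and the choice of Kabsch as the minimal solver are assumptions, not the project's exact pipeline.

```python
import numpy as np


def kabsch(P: np.ndarray, Q: np.ndarray):
    """Least-squares rigid transform (R, t) with Q ~= P @ R.T + t, for Nx3 point sets."""
    cp, cq = P.mean(axis=0), Q.mean(axis=0)
    H = (P - cp).T @ (Q - cq)                  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))     # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, cq - R @ cp


def ransac_motion(P, Q, threshold=0.05, iterations=200, seed=0):
    """Estimate frame-to-frame motion from matched 3D points and flag outlier matches."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(P), dtype=bool)
    for _ in range(iterations):
        idx = rng.choice(len(P), size=3, replace=False)  # minimal sample for a 3D rigid motion
        R, t = kabsch(P[idx], Q[idx])
        residuals = np.linalg.norm(Q - (P @ R.T + t), axis=1)
        inliers = residuals < threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Refit on all inliers of the best hypothesis.
    R, t = kabsch(P[best_inliers], Q[best_inliers])
    return R, t, best_inliers
```

The returned inlier mask plays the role of the red/non-red split in Fig. 1: matches outside it are discarded before the motion is chained across frames.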

Object Tracking and Motion Prediction via UKF and EKF

Tracking and trajectory prediction of preceding cars.

Fig. Visualization of the predicted motion of preceding cars (source: Udacity).

Given:

- Car detection and tracking algorithm based on a neural network.

- 3D active shape model (ASM) approximation method.

Task:

- Filtering of point clouds and feature points via the predicted bounding box.

- Clustering of point clouds and of densely matched feature points.

- ASM matching, object localization, and object matching.

- Comparison of Kalman filtering (KF) vs. EKF vs. UKF w.r.t. efficiency and accuracy.

Techniques: Python, Object Tracking, Kalman Filtering, Motion Estimation.
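As a reference point for the KF/EKF/UKF comparison, here is a minimal linear Kalman filter with an assumed constant-velocity motion model for a preceding car, state `[x, y, vx, vy]`, and position-only measurements (for example, the center of a clustered detection). The noise values are placeholders. The EKF and UKF variants would replace `F` and `H` with nonlinear models, linearized via Jacobians or propagated via sigma points respectively.

```python
import numpy as np


class ConstantVelocityKF:
    """Linear Kalman filter with state [x, y, vx, vy] and position-only measurements."""

    def __init__(self, dt: float, process_var: float = 1.0, meas_var: float = 0.25):
        self.x = np.zeros(4)                 # state estimate
        self.P = np.eye(4) * 100.0           # large initial uncertainty
        self.F = np.eye(4)
        self.F[0, 2] = self.F[1, 3] = dt     # x += vx * dt, y += vy * dt
        self.H = np.array([[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]])
        # Discretized white-acceleration process noise (an assumed modeling choice).
        q = np.array([[dt**4 / 4, dt**3 / 2], [dt**3 / 2, dt**2]]) * process_var
        self.Q = np.zeros((4, 4))
        self.Q[np.ix_([0, 2], [0, 2])] = q
        self.Q[np.ix_([1, 3], [1, 3])] = q
        self.R = np.eye(2) * meas_var

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x

    def update(self, z):
        y = np.asarray(z, dtype=float) - self.H @ self.x    # innovation
        S = self.H @ self.P @ self.H.T + self.R              # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)             # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(4) - K @ self.H) @ self.P
        return self.x
```

Fed with noise-free positions of a car moving at (2, 1) m/s, the velocity estimate settles close to (2, 1) after 100 steps at `dt = 0.1`; calling `predict()` repeatedly without `update()` then extrapolates the trajectory.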

LEGO Courier Student Toy Project (SLAM)

The LEGO Courier operates in an arena with many poles (forming a wall) and a short tunnel; the poles, the tunnel, and the destination are placed randomly.

| Internal Sensors | External Sensors |
|---|---|
| LIDAR | Camera |
| Ultrasonic Unit | |
| Odometry (broken) | |

- Calibration: The courier has to operate in a consistent coordinate system, which means the camera and lidar need to be calibrated to a global coordinate system. This process is simplified by aligning all coordinates to the predefined 2D image coordinate system. The robot's coordinate frame is obtained from a chessboard fixed to the courier's body. Because the courier's odometry is broken, visual odometry plays an important role.

- Localization: Localization from the external camera is not accurate but is sufficient for this scenario. A monocular camera cannot estimate scale, although scale can be approximated from the known chessboard grid size; in practice, this did not perform as expected. In the end, coordinates from the camera are simply triangulated with a fixed height parameter. Lidar localization is initialized with the camera coordinates and then updated with local measurements, predicting the similarity transformation via the ICP algorithm. The location of the courier is determined by Kalman filtering.

- Mapping: Using extra ROS packages was not allowed in this project. I used a 2D grid map with a moderate resolution, visualized simply in an OpenCV window, as can also be seen in the video. A grid map also makes path planning easier.

- Motion Planning: A* algorithm with a buffered cost map.

- Control: iterations of moving forward and turning.
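The lidar update can be sketched as point-to-point ICP in 2D, initialized with the camera-based pose. This minimal version assumes scans as lists of `(x, y)` points, uses brute-force nearest neighbours, and estimates a rigid transform (rotation and translation, no scale), which simplifies the similarity transformation mentioned above; the Kalman fusion step is not shown and all names are hypothetical.

```python
import math


def _transform(points, theta, tx, ty):
    """Apply a 2D rotation by theta and a translation (tx, ty) to a list of points."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def _nearest(p, points):
    """Brute-force nearest neighbour (fine for small scans)."""
    return min(points, key=lambda r: (r[0] - p[0]) ** 2 + (r[1] - p[1]) ** 2)


def _best_rigid(src, dst):
    """Closed-form 2D rigid alignment (rotation + translation) of paired points."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)             # rotation maximizing alignment of centered points
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(scan, reference, init=(0.0, 0.0, 0.0), iterations=30):
    """Point-to-point ICP: returns (theta, tx, ty) mapping `scan` onto `reference`.

    `init` stands in for the camera-based pose guess.
    """
    theta, tx, ty = init
    for _ in range(iterations):
        moved = _transform(scan, theta, tx, ty)
        matched = [_nearest(m, reference) for m in moved]
        # Re-solve the full transform from the original scan to the current matches.
        theta, tx, ty = _best_rigid(scan, matched)
    return theta, tx, ty
```

A good initial guess matters: point-to-point ICP only converges locally, which is why the camera coordinates are used for initialization.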

Techniques: C++, ROS, SLAM, Image Processing, LIDAR Processing.
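The grid-map planning step can be sketched as A* on a 4-connected occupancy grid, where a buffered cost map adds a penalty to free cells near obstacles so the courier keeps clear of the poles. The grid encoding (`1` = obstacle), buffer radius, and penalty are hypothetical values for illustration.

```python
import heapq


def cost_map(grid, radius=1, penalty=5.0):
    """Turn an occupancy grid (1 = obstacle) into per-cell traversal costs.

    Obstacles become None (impassable); free cells within `radius` cells of an
    obstacle get an extra `penalty`, which pushes paths away from walls.
    """
    rows, cols = len(grid), len(grid[0])
    costs = [[None if grid[r][c] else 1.0 for c in range(cols)] for r in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if costs[r][c] is None:
                continue
            near = any(grid[rr][cc]
                       for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                       for cc in range(max(0, c - radius), min(cols, c + radius + 1)))
            if near:
                costs[r][c] += penalty
    return costs


def astar(costs, start, goal):
    """A* on a 4-connected grid; returns the list of cells from start to goal, or None."""
    rows, cols = len(costs), len(costs[0])

    def h(cell):
        # Manhattan distance is admissible because every step costs at least 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0.0, start)]
    g = {start: 0.0}
    parent = {start: None}
    while open_heap:
        _, g_cur, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        if g_cur > g[cur]:
            continue  # stale heap entry
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and costs[nr][nc] is not None:
                new_g = g_cur + costs[nr][nc]
                if new_g < g.get(nxt, float("inf")):
                    g[nxt] = new_g
                    parent[nxt] = cur
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

Raising `penalty` trades path length for clearance; with `penalty=0` the planner reduces to plain shortest-path A* on the grid.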

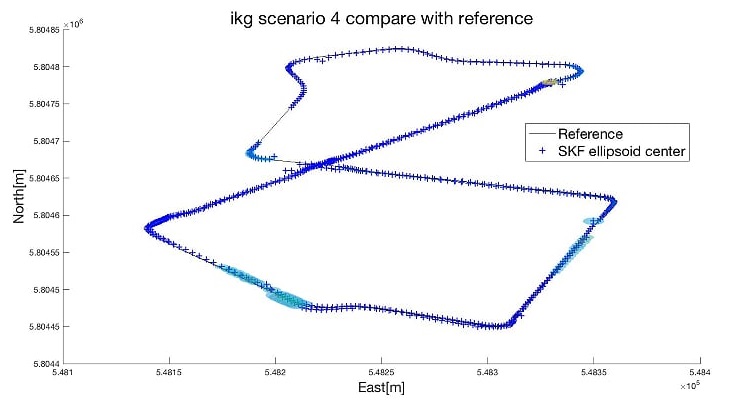

Trajectory Estimation with GPS + IMU based on Set-membership Kalman Filtering

Fig. 3 Visualization of predicted ellipsoids; the colored patches are the ellipsoids. The probability distribution is not visualized.

Based on the paper: Geo-Referencing of a Multi-Sensor System Based on Set-Membership Kalman Filter.

The car is equipped with GPS and IMU sensors. In conventional filtering methods, the object is treated as a rigid body, so motion estimation reduces to estimating a similarity transformation. However, non-rigid objects also need motion estimation, such as fluid in a balloon, which deforms when the water pressure changes. Here, uncertainty is represented as an ellipsoidal set surrounded by the probability distribution. This application serves as a generalization test on a typical filtering scenario.

Techniques: Filtering
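The set-membership part of the prediction step can be illustrated with ellipsoid calculus: the state set `E(c, P)` is mapped through a linear motion model and then enlarged by a bounded noise ellipsoid via a trace-minimal outer approximation of the Minkowski sum. This is only a sketch of ellipsoidal propagation under unknown-but-bounded noise, with hypothetical matrices; the filter in the referenced paper additionally carries stochastic (Gaussian) uncertainty and a measurement update, which are not shown.

```python
import numpy as np


def linear_map(c, P, A):
    """Image of the ellipsoid E(c, P) = {x : (x - c)^T P^-1 (x - c) <= 1} under x -> A x."""
    return A @ c, A @ P @ A.T


def minkowski_sum(c1, P1, c2, P2):
    """Trace-minimal outer ellipsoid of the Minkowski sum E(c1, P1) + E(c2, P2).

    The family P(p) = (1 + 1/p) P1 + (1 + p) P2, p > 0, always contains the sum;
    p = sqrt(tr P1 / tr P2) minimizes the trace.
    """
    p = np.sqrt(np.trace(P1) / np.trace(P2))
    return c1 + c2, (1 + 1 / p) * P1 + (1 + p) * P2


def predict_set(c, P, A, W):
    """Set-membership prediction for x_next = A x + w with w in E(0, W)."""
    c_pred, P_pred = linear_map(c, P, A)
    return minkowski_sum(c_pred, P_pred, np.zeros_like(c), W)


def contains(c, P, x):
    """Check whether x lies in E(c, P) (with a small numerical tolerance)."""
    d = np.asarray(x, dtype=float) - c
    return float(d @ np.linalg.solve(P, d)) <= 1.0 + 1e-12
```

In 1D the bound is tight: an interval of radius 2 plus noise of radius 1 gives radius 3 (`P = 9`). In higher dimensions the outer ellipsoid is conservative, which is what the colored patches in Fig. 3 depict.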

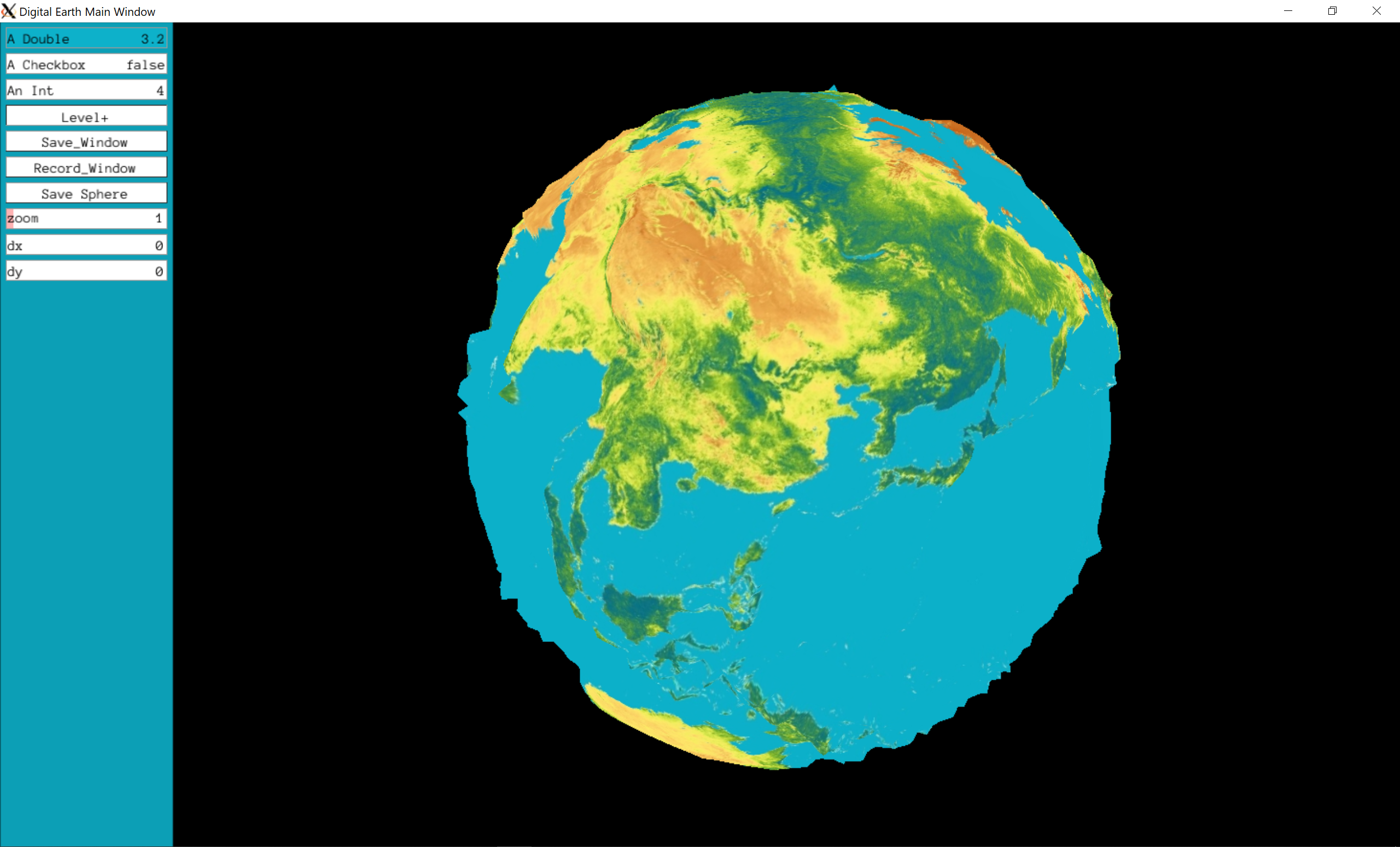

Digital Earth based on WMS

Fig. 0 Digital Earth covered with DEM model

This is an educational project that simulates the elevation of the Earth's surface, the depth of the ocean, etc. Earth-scale grid techniques are applied to create an Earth model. The sphere starts from an icosahedron and is iteratively refined to deeper levels to sample the local elevation. It can also simulate the gravity field or any other data source.

Techniques: OpenGL, C++, Geographic Grid.
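The icosahedron-based refinement can be sketched in a few lines: start from the 12 vertices and 20 faces of a unit icosahedron, split every triangle into four, and push the new edge midpoints back onto the sphere. After `n` levels the grid has `20 * 4^n` faces and `10 * 4^n + 2` vertices. The `to_lat_lon` helper (z axis through the poles) is a hypothetical hook for sampling a DEM or another data source; the project's actual OpenGL/WMS code is not shown.

```python
import math


def _normalize(v):
    """Project a 3D point onto the unit sphere."""
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / n, v[1] / n, v[2] / n)


def icosahedron():
    """Unit icosahedron: 12 vertices and 20 triangular faces."""
    t = (1 + math.sqrt(5)) / 2
    verts = [(-1, t, 0), (1, t, 0), (-1, -t, 0), (1, -t, 0),
             (0, -1, t), (0, 1, t), (0, -1, -t), (0, 1, -t),
             (t, 0, -1), (t, 0, 1), (-t, 0, -1), (-t, 0, 1)]
    faces = [(0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
             (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
             (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
             (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]
    return [_normalize(v) for v in verts], faces


def subdivide(verts, faces):
    """Split every triangle into 4, pushing new edge midpoints onto the unit sphere."""
    verts = list(verts)
    cache = {}

    def midpoint(i, j):
        key = (min(i, j), max(i, j))  # shared edges reuse the same midpoint vertex
        if key not in cache:
            a, b = verts[i], verts[j]
            verts.append(_normalize(((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)))
            cache[key] = len(verts) - 1
        return cache[key]

    new_faces = []
    for a, b, c in faces:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        new_faces += [(a, ab, ca), (b, bc, ab), (c, ca, bc), (ab, bc, ca)]
    return verts, new_faces


def geodesic_grid(level):
    """Icosahedron refined `level` times."""
    verts, faces = icosahedron()
    for _ in range(level):
        verts, faces = subdivide(verts, faces)
    return verts, faces


def to_lat_lon(v):
    """Latitude/longitude in degrees of a unit vector, assuming z points to the north pole."""
    x, y, z = v
    return math.degrees(math.asin(max(-1.0, min(1.0, z)))), math.degrees(math.atan2(y, x))
```

Each vertex's latitude/longitude can then be used to look up an elevation (or gravity) value, and the refinement level sets the sampling density.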
